from __future__ import annotations

from functools import partial

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import seaborn as sns

from example_models.linear_chain import get_linear_chain_2v

from modelbase2 import Model, Simulator, npe, plot, scan

from modelbase2.distributions import LogNormal, Normal, sample

from modelbase2.surrogates import train_polynomial_surrogate, train_torch_surrogate

from modelbase2.types import AbstractSurrogate, unwrap

Mechanistic Learning

Mechanistic learning is the intersection of mechanistic modelling and machine learning.

modelbase currently supports two such approaches: surrogates and neural posterior estimation.

In the following we will mostly use the modelbase2.surrogates and modelbase2.npe modules to learn about both approaches.

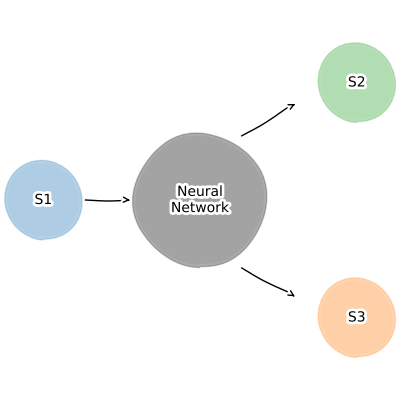

Surrogate models

Surrogate models replace whole parts of a mechanistic model (or even the entire model) with machine learning models.

This lets you combine multiple models of arbitrary size without having to worry about the internal state of each model.

They are especially useful for improving the description of boundary effects, e.g. a dynamic description of downstream consumption.

We will start with a simple linear chain model:

$$ \Large \varnothing \xrightarrow{v_1} x \xrightarrow{v_2} y \xrightarrow{v_3} \varnothing $$

where we want to read out the steady-state rate of $v_3$ dependent on the fixed concentration of $x$, while ignoring the inner state of the model.

$$ \Large x \xrightarrow{} ... \xrightarrow{v_3}$$

Since we need to fix a variable as a parameter, we can use the make_variable_static method.

# Now "x" is a parameter

get_linear_chain_2v().make_variable_static("x").parameters

{'k1': 1.0, 'k2': 2.0, 'k3': 1.0, 'x': 1.0}
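To see what the surrogate will have to learn, it can help to work out the steady state by hand. The sketch below assumes mass-action kinetics (v2 = k2 * x and v3 = k3 * y), which is an assumption about get_linear_chain_2v and is not stated in this section; the function names are hypothetical. With x fixed, dy/dt = k2 * x - k3 * y = 0 gives y = k2 * x / k3, so the steady-state rate of v3 equals k2 * x.

```python
def steady_state_v3(x: float, k2: float = 2.0, k3: float = 1.0) -> float:
    """Analytic steady-state flux of v3 for a fixed x (assumed mass-action kinetics)."""
    y_ss = k2 * x / k3  # from dy/dt = k2 * x - k3 * y = 0
    return k3 * y_ss


def simulate_v3(
    x: float,
    k2: float = 2.0,
    k3: float = 1.0,
    dt: float = 1e-3,
    t_end: float = 50.0,
) -> float:
    """Integrate dy/dt = k2 * x - k3 * y with explicit Euler and return v3 at t_end."""
    y = 0.0
    for _ in range(int(t_end / dt)):
        y += dt * (k2 * x - k3 * y)
    return k3 * y


# The numerical result should agree with the analytic expression
analytic = steady_state_v3(1.0)
numeric = simulate_v3(1.0)
```

Under these assumptions the relationship between x and v3 is linear, which is the kind of dependency a low-degree polynomial surrogate can capture.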

We can already write a function that builds a model and takes our surrogate as an input.

def get_model_with_surrogate(surrogate: AbstractSurrogate) -> Model:
    model = Model()
    model.add_variables({"x": 1.0, "z": 0.0})

    # Adding the surrogate
    model.add_surrogate(
        "surrogate",
        surrogate,
        args=["x"],
        stoichiometries={
            "v2": {"x": -1, "z": 1},
        },
    )

    # Note that besides the surrogate we haven't defined any other reaction!
    # We could have though
    return model

Create data

All surrogates used in the following are trained on the steady-state fluxes as a function of the inputs.

We can thus create the necessary training data using scan.steady_state.

Since this is usually a large amount of data, we recommend caching the results using Cache.

surrogate_features = pd.DataFrame({"x": np.geomspace(1e-12, 2.0, 21)})

surrogate_targets = scan.steady_state(

get_linear_chain_2v().make_variable_static("x"),

parameters=surrogate_features,

).fluxes.loc[:, ["v3"]]

# It's always a good idea to check the inputs and outputs

fig, (ax1, ax2) = plot.two_axes(figsize=(6, 3), sharex=False)

_ = plot.violins(surrogate_features, ax=ax1)[1].set(
    title="Features", ylabel="Concentration / a.u."
)

_ = plot.violins(surrogate_targets, ax=ax2)[1].set(

title="Targets", ylabel="Flux / a.u."

)

plt.show()
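The caching recommendation can be illustrated with a generic stand-in. modelbase2's own Cache is not shown in this section, so the sketch below does not use it; instead it uses a hypothetical helper called cached that pickles a result to disk and reloads it on later calls. The helper name, the file name and the toy data are all assumptions made for illustration.

```python
import pickle
import tempfile
from pathlib import Path
from typing import Any, Callable


def cached(path: Path, compute: Callable[[], Any]) -> Any:
    """Return the pickled result at `path`; compute and store it on a cache miss."""
    if path.exists():
        with path.open("rb") as fh:
            return pickle.load(fh)
    result = compute()
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("wb") as fh:
        pickle.dump(result, fh)
    return result


calls = []


def expensive_scan() -> dict:
    """Stand-in for a long-running scan; records how often it actually runs."""
    calls.append(1)
    return {"x": [0.1, 1.0], "v3": [0.2, 2.0]}


with tempfile.TemporaryDirectory() as tmp:
    cache_file = Path(tmp) / "surrogate_data.pkl"
    first = cached(cache_file, expensive_scan)   # computes and writes the file
    second = cached(cache_file, expensive_scan)  # loads from the file
```

The second call returns the same data without running the scan again, which is the behaviour you want for large training sets.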


Polynomial surrogate

We can train our polynomial surrogate using train_polynomial_surrogate.

By default this will train polynomials for the degrees (1, 2, 3, 4, 5, 6, 7), but you can change that by using the degrees argument.

The function returns the trained surrogate and the training information for the different polynomial degrees.

Currently the polynomial surrogates are limited to a single feature and a single target.

surrogate, info = train_polynomial_surrogate(

surrogate_features["x"],

surrogate_targets["v3"],

)

print("Model", surrogate.model, end="\n\n")

print(info["score"])

Model 2.0 + 2.0·(-1.0 + 1.0x)

degree
1     2.0
2     4.0
3     6.0
4     8.0
5    10.0
6    12.0
7    14.0
Name: score, dtype: float64
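The idea of fitting several polynomial degrees and scoring each one can be sketched with plain NumPy. This is not the implementation of train_polynomial_surrogate: the helper fit_polynomials is hypothetical, the saturating toy target is made up, and using the Akaike information criterion as the score is an assumption, since the score used by modelbase2 is not described here.

```python
import numpy as np
from numpy.polynomial import Polynomial

# Toy data: a saturating relationship that no low-degree polynomial fits exactly
x = np.linspace(0.0, 2.0, 21)
y = x / (0.5 + x)


def fit_polynomials(x, y, degrees=(1, 2, 3, 4, 5, 6, 7)):
    """Fit one polynomial per degree and score each fit (lower is better)."""
    n = len(x)
    models, scores = {}, {}
    for degree in degrees:
        model = Polynomial.fit(x, y, deg=degree)
        rss = float(np.sum((model(x) - y) ** 2))
        rss = max(rss, np.finfo(float).tiny)  # guard against log(0) for exact fits
        # Assumed score: AIC for least squares with (degree + 1) coefficients
        scores[degree] = 2 * (degree + 1) + n * np.log(rss / n)
        models[degree] = model
    return models, scores


models, scores = fit_polynomials(x, y)
best_degree = min(scores, key=scores.get)
best_model = models[best_degree]
```

A penalised score of this kind trades goodness of fit against the number of coefficients, so a higher degree is only preferred when it reduces the residuals enough.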

You can then insert the surrogate into the model using the function we defined earlier.

concs, fluxes = unwrap(

Simulator(get_model_with_surrogate(surrogate)).simulate(10).get_result()

)

fig, (ax1, ax2) = plot.two_axes(figsize=(8, 3))

plot.lines(concs, ax=ax1)

plot.lines(fluxes, ax=ax2)

ax1.set(xlabel="time / a.u.", ylabel="concentration / a.u.")

ax2.set(xlabel="time / a.u.", ylabel="flux / a.u.")

plt.show()

While polynomial regression can model nonlinear relationships between variables, it often struggles when the underlying relationship is more complex than a polynomial function.

You will learn about using neural networks in the next section.

Neural network surrogate using PyTorch

Neural networks are designed to capture highly complex and nonlinear relationships.

Through layers of neurons and activation functions, neural networks can learn intricate patterns that are not easily represented by e.g. a polynomial.

In principle, they can approximate any continuous function on a bounded domain, given sufficient capacity and appropriate training.

You can train a neural network surrogate based on the popular PyTorch library using train_torch_surrogate.

That function takes the features, targets and the number of epochs as inputs for its training.

train_torch_surrogate returns the trained surrogate, as well as the training loss.

It is always a good idea to check whether that training loss approaches 0.

surrogate, loss = train_torch_surrogate(

features=surrogate_features,

targets=surrogate_targets,

epochs=250,

)

ax = loss.plot(ax=plt.subplots(figsize=(4, 2.5))[1])

ax.set_ylim(0, None)

plt.show()
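To make the training loss more concrete, here is a minimal sketch of what a training loop does. It deliberately uses plain NumPy with full-batch gradient descent instead of PyTorch, so it is not how train_torch_surrogate works internally; the network size, learning rate and toy data (v3 = 2 * x) are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy training data: one feature, one target
x = np.linspace(0.0, 2.0, 21).reshape(-1, 1)
y = 2.0 * x

# One hidden layer with tanh activation
n_hidden = 8
w1 = rng.normal(0.0, 0.5, size=(1, n_hidden))
b1 = np.zeros(n_hidden)
w2 = rng.normal(0.0, 0.5, size=(n_hidden, 1))
b2 = np.zeros(1)

learning_rate = 0.01
losses = []
for _ in range(2000):
    # Forward pass
    h = np.tanh(x @ w1 + b1)
    pred = h @ w2 + b2
    err = pred - y
    losses.append(float(np.mean(err**2)))

    # Backward pass: gradients of the mean squared error
    grad_pred = 2.0 * err / len(x)
    grad_w2 = h.T @ grad_pred
    grad_b2 = grad_pred.sum(axis=0)
    grad_h = grad_pred @ w2.T
    grad_z = grad_h * (1.0 - h**2)  # derivative of tanh
    grad_w1 = x.T @ grad_z
    grad_b1 = grad_z.sum(axis=0)

    # Plain gradient-descent update
    w1 -= learning_rate * grad_w1
    b1 -= learning_rate * grad_b1
    w2 -= learning_rate * grad_w2
    b2 -= learning_rate * grad_b2
```

The list losses plays the same role as the loss returned by the training function: if it does not decrease towards a small value, more epochs or a different network are worth trying.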


As before, you can then insert the surrogate into the model using the function we defined earlier.

concs, fluxes = unwrap(

Simulator(get_model_with_surrogate(surrogate)).simulate(10).get_result()

)

fig, (ax1, ax2) = plot.two_axes(figsize=(8, 3))

plot.lines(concs, ax=ax1)

plot.lines(fluxes, ax=ax2)

ax1.set(xlabel="time / a.u.", ylabel="concentration / a.u.")

ax2.set(xlabel="time / a.u.", ylabel="flux / a.u.")

plt.show()

Troubleshooting

It often makes sense to check specific predictions of the surrogate.

For example, what does it predict when the inputs are all 0?

print(surrogate.predict(np.array([-0.1])))

print(surrogate.predict(np.array([0.0])))

print(surrogate.predict(np.array([0.1])))

{'v2': np.float32(-0.009848562)}

{'v2': np.float32(7.374515e-05)}

{'v2': np.float32(0.1986947)}
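One possible complement to such spot checks is to flag inputs that lie outside the range covered by the training features, because predictions there are extrapolations. The helper outside_training_range below is hypothetical and not part of modelbase2; the training grid mirrors the np.geomspace(1e-12, 2.0, 21) features used above.

```python
import numpy as np


def outside_training_range(train_features, query):
    """Return a boolean array marking query values outside [min, max] of the training data."""
    train = np.asarray(train_features, dtype=float)
    q = np.asarray(query, dtype=float)
    return (q < train.min()) | (q > train.max())


train_x = np.geomspace(1e-12, 2.0, 21)
flags = outside_training_range(train_x, [-0.1, 0.0, 0.1])
```

Here the first two query points are flagged, so a slightly negative prediction at x = -0.1 is an extrapolation and not necessarily a sign of a badly trained surrogate.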

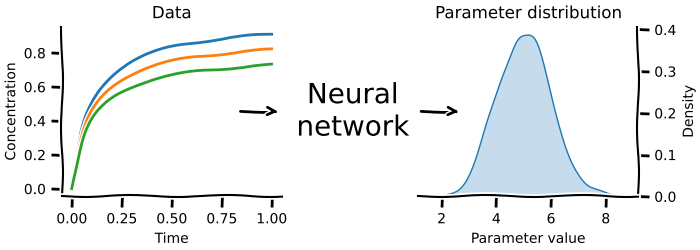

Neural posterior estimation

Neural posterior estimation answers the question: what parameters could have generated the data I measured?

Here you use an ODE model and prior knowledge about the parameters of interest to create synthetic data.

You then train a neural network on the inverse problem, using the generated synthetic data as the features and the input parameters as the targets.

Once training has succeeded, the neural network can predict the input parameters for real-world data.

You can use this technique for both steady-state and time course data.

The only difference is that you generate the time course data with scan.time_course.

If you use cached data, take care to save the targets as well, since they are sampled randomly and would otherwise no longer match the cached features :)

# Note that now the parameters are the targets

npe_targets = sample(

{

"k1": LogNormal(mean=1.0, sigma=0.3),

},

n=1_000,

)

# And the generated data are the features

npe_features = scan.steady_state(

get_linear_chain_2v(),

parameters=npe_targets,

).results.loc[:, ["y", "v2", "v3"]]

# It's always a good idea to check the inputs and outputs

fig, (ax1, ax2) = plot.two_axes(figsize=(6, 3), sharex=False)

_ = plot.violins(npe_features, ax=ax1)[1].set(title="Features", ylabel="Flux / a.u.")

_ = plot.violins(npe_targets, ax=ax2)[1].set(title="Targets", ylabel="Flux / a.u.")

plt.show()
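The swap of roles between parameters and simulated data can be sketched without any neural network. The example below assumes a mass-action linear chain with a constant influx v1 = k1, so that at steady state v1 = v2 = v3 = k1 and y = k1 / k3; this is an assumption about the example model. It also swaps the neural estimator for ordinary least squares, which only works here because the toy relationship is linear, and it uses NumPy's log-normal parameterisation, which may differ from the LogNormal class above.

```python
import numpy as np

rng = np.random.default_rng(0)

# Targets: parameters drawn from the prior
k1 = rng.lognormal(mean=0.0, sigma=0.3, size=1000)

# Features: steady state of the assumed chain (k3 = 1), columns y, v2, v3
k3 = 1.0
features = np.column_stack([k1 / k3, k1, k1])

# Stand-in "estimator": least-squares map from features back to the parameter.
# The columns are collinear, so lstsq returns the minimum-norm solution.
weights, *_ = np.linalg.lstsq(features, k1, rcond=None)
k1_pred = features @ weights
```

In this toy case the parameter is recovered exactly; with a nonlinear model or noisy data, a more flexible estimator such as a neural network takes over the role of the least-squares fit.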


Train NPE

You can then train your neural posterior estimator using npe.train_torch_ss_estimator (or npe.train_torch_time_course_estimator if you have time course data).

estimator, losses = npe.train_torch_ss_estimator(

features=npe_features,

targets=npe_targets,

epochs=1000,

)

ax = losses.plot(figsize=(4, 2.5))

ax.set(xlabel="epoch", ylabel="loss")

ax.set_ylim(0, None)

plt.show()


Sanity check: do prior and posterior match?

fig, (ax1, ax2) = plot.two_axes(figsize=(6, 2))

ax = sns.kdeplot(npe_targets, fill=True, ax=ax1)

ax.set_title("Prior")

posterior = estimator.predict(npe_features)

ax = sns.kdeplot(posterior, fill=True, ax=ax2)

ax.set_title("Posterior")

plt.show()
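Besides comparing the two density plots by eye, you could compare a few quantiles numerically. The helper quantile_table is hypothetical, and the posterior samples below are synthetic stand-ins (prior samples plus small noise), not output of a trained estimator.

```python
import numpy as np


def quantile_table(prior, posterior, quantiles=(0.05, 0.25, 0.5, 0.75, 0.95)):
    """Map each quantile level to a (prior value, posterior value) pair."""
    prior_q = np.quantile(np.asarray(prior, dtype=float), quantiles)
    post_q = np.quantile(np.asarray(posterior, dtype=float), quantiles)
    return {q: (float(a), float(b)) for q, a, b in zip(quantiles, prior_q, post_q)}


rng = np.random.default_rng(1)
prior_samples = rng.lognormal(mean=0.0, sigma=0.3, size=1000)
# Stand-in for estimator predictions: the prior samples with a little noise
posterior_samples = prior_samples + rng.normal(0.0, 0.01, size=1000)

table = quantile_table(prior_samples, posterior_samples)
largest_gap = max(abs(a - b) for a, b in table.values())
```

A large gap at any quantile would suggest that the estimator has not learned to reproduce the distribution it was trained on.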

First finish line

With that you now know most of what you will need on a day-to-day basis about mechanistic learning in modelbase2. Congratulations!

Customizing training

from typing import TYPE_CHECKING

from modelbase2 import LinearLabelMapper, Simulator

from modelbase2.distributions import sample

from modelbase2.fns import michaelis_menten_1s

from modelbase2.parallel import parallelise

if TYPE_CHECKING:
    from modelbase2.npe import AbstractEstimator

# FIXME: todo

# Show how to change Adam settings or use other optimizers

# Show how to change the surrogate network

Label NPE

def get_closed_cycle() -> tuple[Model, dict[str, int], dict[str, list[int]]]:
    """
    | Reaction       | Labelmap |
    | -------------- | -------- |
    | x1 ->[v1] x2   | [0, 1]   |
    | x2 ->[v2a] x3  | [0, 1]   |
    | x2 ->[v2b] x3  | [1, 0]   |
    | x3 ->[v3] x1   | [0, 1]   |
    """
    model = (
        Model()
        .add_parameters(
            {
                "vmax_1": 1.0,
                "km_1": 0.5,
                "vmax_2a": 1.0,
                "vmax_2b": 1.0,
                "km_2": 0.5,
                "vmax_3": 1.0,
                "km_3": 0.5,
            }
        )
        .add_variables({"x1": 1.0, "x2": 0.0, "x3": 0.0})
        .add_reaction(
            "v1",
            michaelis_menten_1s,
            stoichiometry={"x1": -1, "x2": 1},
            args=["x1", "vmax_1", "km_1"],
        )
        .add_reaction(
            "v2a",
            michaelis_menten_1s,
            stoichiometry={"x2": -1, "x3": 1},
            args=["x2", "vmax_2a", "km_2"],
        )
        .add_reaction(
            "v2b",
            michaelis_menten_1s,
            stoichiometry={"x2": -1, "x3": 1},
            args=["x2", "vmax_2b", "km_2"],
        )
        .add_reaction(
            "v3",
            michaelis_menten_1s,
            stoichiometry={"x3": -1, "x1": 1},
            args=["x3", "vmax_3", "km_3"],
        )
    )

    label_variables: dict[str, int] = {"x1": 2, "x2": 2, "x3": 2}
    label_maps: dict[str, list[int]] = {
        "v1": [0, 1],
        "v2a": [0, 1],
        "v2b": [1, 0],
        "v3": [0, 1],
    }
    return model, label_variables, label_maps
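The label maps in the table describe how label positions are carried from substrate to product. The sketch below is a hypothetical illustration and not part of LinearLabelMapper; it assumes that product position i is read from substrate position label_map[i]. For the two maps used here, [0, 1] and [1, 0], the opposite convention gives the same result.

```python
def apply_label_map(substrate_labels, label_map):
    """Return product labels, reading product position i from substrate position label_map[i]."""
    return [substrate_labels[source] for source in label_map]


# A two-position substrate labelled only at position 0
labelled_first = [1, 0]

kept = apply_label_map(labelled_first, [0, 1])     # e.g. v2a: positions are kept
swapped = apply_label_map(labelled_first, [1, 0])  # e.g. v2b: positions are swapped
```

Because v2a and v2b differ only in their label map, the label distribution in x3 carries information about the relative flux through the two reactions.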

def _worker(
    x: tuple[tuple[int, pd.Series], tuple[int, pd.Series]],
    mapper: LinearLabelMapper,
    time: float,
    initial_labels: dict[str, int | list[int]],
) -> pd.Series:
    (_, y_ss), (_, v_ss) = x
    return unwrap(
        Simulator(mapper.build_model(y_ss, v_ss, initial_labels=initial_labels))
        .simulate(time)
        .get_result()
    ).variables.iloc[-1]


def get_label_distribution_at_time(
    model: Model,
    label_variables: dict[str, int],
    label_maps: dict[str, list[int]],
    time: float,
    initial_labels: dict[str, int | list[int]],
    ss_concs: pd.DataFrame,
    ss_fluxes: pd.DataFrame,
) -> pd.DataFrame:
    mapper = LinearLabelMapper(
        model,
        label_variables=label_variables,
        label_maps=label_maps,
    )
    return pd.DataFrame(
        parallelise(
            partial(_worker, mapper=mapper, time=time, initial_labels=initial_labels),
            inputs=list(
                enumerate(zip(ss_concs.iterrows(), ss_fluxes.iterrows(), strict=True))
            ),
            cache=None,
        )
    ).T

def inverse_parameter_elasticity(
    estimator: AbstractEstimator,
    datum: pd.Series,
    *,
    normalized: bool = True,
    displacement: float = 1e-4,
) -> pd.DataFrame:
    ref = estimator.predict(datum).iloc[0, :]

    coefs = {}
    for name, value in datum.items():
        # Central finite difference with a relative displacement of each feature.
        # The parentheses matter: value * 1 + displacement would shift the value
        # by an absolute amount, which does not match the denominator below.
        up = estimator.predict(
            pd.Series(datum.to_dict() | {name: value * (1 + displacement)})
        ).iloc[0, :]
        down = estimator.predict(
            pd.Series(datum.to_dict() | {name: value * (1 - displacement)})
        ).iloc[0, :]
        coefs[name] = (up - down) / (2 * displacement * value)

    coefs = pd.DataFrame(coefs)
    if normalized:
        coefs *= datum / ref.to_numpy()
    return coefs
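The finite-difference idea behind this function can be checked on a function whose elasticity is known. The sketch below is a scalar stand-in with a hypothetical helper normalized_elasticity; it uses a central difference with a relative displacement and then scales the slope by x / f(x). For a power law f(x) = a * x**n the normalized elasticity is n.

```python
def normalized_elasticity(fn, x, displacement=1e-4):
    """Central finite-difference estimate of d ln f / d ln x at x."""
    up = fn(x * (1 + displacement))
    down = fn(x * (1 - displacement))
    slope = (up - down) / (2 * displacement * x)  # approximates df/dx
    return slope * x / fn(x)                      # scale to a relative sensitivity


# Power law with exponent 3: the normalized elasticity should be close to 3
cubic = normalized_elasticity(lambda x: 5.0 * x**3, 2.0)
```

The normalization makes the coefficient dimensionless, so sensitivities with respect to features of very different magnitude can be compared directly.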

model, label_variables, label_maps = get_closed_cycle()

ss_concs, ss_fluxes = unwrap(

Simulator(model)

.update_parameters({"vmax_2a": 1.0, "vmax_2b": 0.5})

.simulate_to_steady_state()

.get_result()

)

mapper = LinearLabelMapper(

model,

label_variables=label_variables,

label_maps=label_maps,

)

_, axs = plot.relative_label_distribution(

mapper,

unwrap(

Simulator(

mapper.build_model(

ss_concs.iloc[-1], ss_fluxes.iloc[-1], initial_labels={"x1": 0}

)

)

.simulate(5)

.get_result()

).variables,

sharey=True,

n_cols=3,

)

axs[0].set_ylabel("Relative label distribution")

axs[1].set_xlabel("Time / s")

plt.show()

surrogate_targets = sample(

{

"vmax_2b": Normal(0.5, 0.1),

},

n=1000,

).clip(lower=0)

ax = sns.kdeplot(surrogate_targets, fill=True)

ax.set_title("Prior")


ss_concs, ss_fluxes = scan.steady_state(model, parameters=surrogate_targets)


fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(12, 4))

_, ax = plot.violins(ss_concs, ax=ax1)

ax.set_ylabel("Concentration / a.u.")

_, ax = plot.violins(ss_fluxes, ax=ax2)

ax.set_ylabel("Flux / a.u.")


surrogate_features = get_label_distribution_at_time(

model=model,

label_variables=label_variables,

label_maps=label_maps,

time=5,

ss_concs=ss_concs,

ss_fluxes=ss_fluxes,

initial_labels={"x1": 0},

)

_, ax = plot.violins(surrogate_features)

ax.set_ylabel("Relative label distribution")


estimator, losses = npe.train_torch_ss_estimator(

features=surrogate_features,

targets=surrogate_targets,

epochs=2_000,

)

ax = losses.plot()

ax.set_ylim(0, None)


fig, (ax1, ax2) = plt.subplots(

1,

2,

figsize=(8, 3),

layout="constrained",

sharex=True,

sharey=False,

)

ax = sns.kdeplot(surrogate_targets, fill=True, ax=ax1)

ax.set_title("Prior")

posterior = estimator.predict(surrogate_features)

ax = sns.kdeplot(posterior, fill=True, ax=ax2)

ax.set_title("Posterior")

ax2.set_ylim(*ax1.get_ylim())

plt.show()

Inverse parameter sensitivity

_ = plot.heatmap(inverse_parameter_elasticity(estimator, surrogate_features.iloc[0]))

elasticities = pd.DataFrame(

{

k: inverse_parameter_elasticity(estimator, i).loc["vmax_2b"]

for k, i in surrogate_features.iterrows()

}

).T

_ = plot.violins(elasticities)